Explore the Rise of AI Narration: Why Neural Voices Sound More Human Than Ever

The change in AI narration wasn't a slow crawl; it was a quantum leap. Modern neural voices sound so human because they've been trained on immense libraries of actual human speech, allowing them to finally grasp the subtle rhythms, tones, and emotions that machines could never replicate before. This turns them from simple text readers into genuinely compelling storytellers.

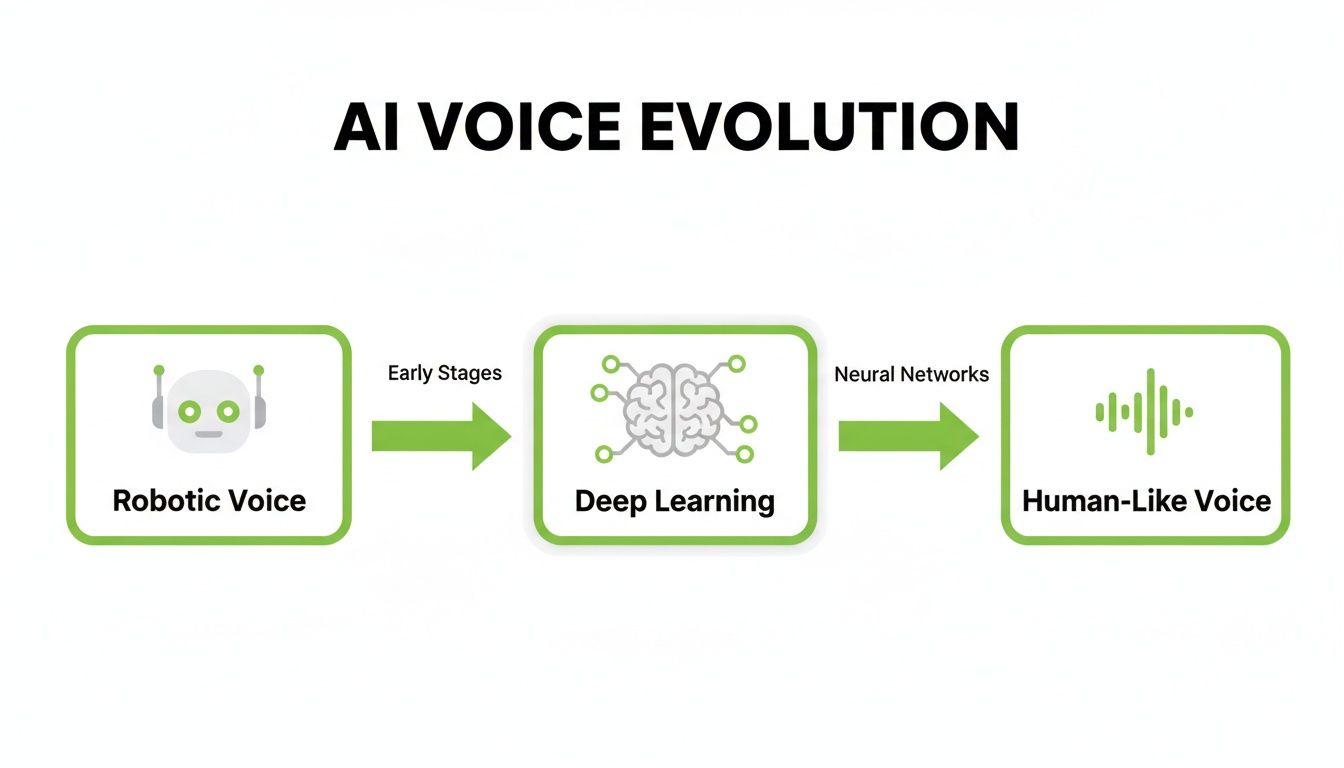

From Robotic Monotone to Human-Like Emotion

Think back to the clunky, robotic text-to-speech voices from early GPS devices or automated phone menus. For years, synthetic speech was defined by its flat, monotone delivery and weird pacing—a dead giveaway you were listening to a machine.

That era is officially over. Today, we're in the middle of a massive shift driven by AI narration. Neural voices aren't just understandable anymore; they're genuinely engaging and can even convey emotion.

This incredible change didn't just pop up overnight. It's the product of huge advancements in deep learning and our ability to feed these models enormous datasets of human speech. Instead of just clumsily stitching pre-recorded words together, modern AI actually learns the fundamental patterns of how we communicate. It studies not just what we say, but how we say it.

Understanding this "music of speech" is key to seeing how AI finally broke free from its monotone prison. It's what allows the technology to inject natural rhythm, stress, and intonation into its narration. To really get a handle on this, you can explore the crucial role of prosody in speech.

The Engine of Change

The market numbers tell the story loud and clear. The AI voice generator market has gone from a niche for robotic voices to a booming industry for hyper-realistic narration. It exploded in value from around USD 4.9 billion to a projected USD 6.40 billion in just a single year. And it's not slowing down—projections show it rocketing to USD 54.54 billion by 2033, growing at a blistering 30.7% compound annual rate.
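You can sanity-check the quoted growth rate with the standard compound annual growth rate (CAGR) formula, CAGR = (end / start)^(1 / years) - 1. The paragraph doesn't give a base year for the USD 6.40 billion figure, so this sketch assumes it refers to 2025. That gives an eight-year span to 2033.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value over the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the paragraph above, in USD billions.
# ASSUMPTION: the 6.40 figure is for 2025, so 2025 -> 2033 is 8 years.
rate = cagr(6.40, 54.54, years=8)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption the implied rate comes out at about 30.7%, which matches the figure in the projection.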

This meteoric rise is driven largely by breakthroughs in neural text-to-speech (TTS) technology. Deep learning models have transformed the output from lifeless, robotic speech into voices that capture human emotion and nuance with uncanny accuracy.

For content creators, especially those in the fast-moving world of short-form video, this technology is a total game-changer. What felt like science fiction just a few years ago is now a practical tool that solves very real problems.

The key difference today is that AI no longer just reads words; it interprets context. This allows it to generate speech with the subtle pauses, emphasis, and emotional tone that makes narration captivating, turning a simple script into a powerful story.

This shift is fueling the content boom on platforms like TikTok and YouTube Shorts, where a great voiceover is essential for stopping the scroll. It lets creators produce consistent, high-quality audio without needing expensive recording gear or feeling the pressure of recording their own voice.

Let's break down the core advancements that made this possible.

Core Advancements in Lifelike AI Narration

This table sums up the key technological shifts that allow modern AI voices to sound so convincingly human.

| Advancement | What It Means in Simple Terms | Impact on Realism |
|---|---|---|
| Deep Learning Models | Instead of rules, AI learns from massive audio examples, like a student learning a language by listening. | Voices sound less programmed and more natural, with human-like imperfections. |
| Massive Training Data | Models are trained on thousands of hours of diverse human speech, accents, and emotional tones. | AI can produce a huge range of voice styles, from calm and authoritative to excited and emotional. |
| Prosody Conditioning | The AI learns the "music" of speech—rhythm, pitch, and stress—not just the words themselves. | Narration has a natural flow and cadence, avoiding the classic robotic, flat delivery. |
| Real-Time Adaptation | The system can adjust its delivery based on the context of the words, making it sound more conversational. | Pauses and emphasis are placed correctly, making the speech feel more dynamic and aware. |

These breakthroughs work together, creating an experience that's often hard to distinguish from a human speaker. The result is a powerful new tool for anyone looking to create engaging content.

How AI Learned to Speak with Feeling

The leap from robotic, choppy speech to the smooth narration we hear today wasn't just a minor tweak. It was a complete overhaul in how machines learn to talk. For a long time, text-to-speech systems were essentially audio puzzles, piecing together pre-recorded sounds of syllables and words. The result? Speech that was understandable but totally flat, emotionally empty, and just… awkward.

Modern AI narration threw that old rulebook out the window and embraced deep learning. Instead of being fed a list of grammar rules, these new neural networks are more like apprentice actors. They listen to thousands of hours of real human speech, absorbing not just the words but the way we say them. This is the secret sauce behind why AI voices now sound so convincingly human.

From Predicting Sound to Understanding Context

One of the first major breakthroughs came from models like WaveNet, which learned to build audio from the ground up, one audio sample at a time, predicting each new sample from the ones before it. Picture a musician who can hear the first few notes of a song and just knows what comes next. By modeling the raw waveform directly, these models learned the tiny, seamless transitions that make our voices flow naturally.
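The core idea of autoregressive generation is that each new sample is predicted from the samples already produced. A toy example makes the loop visible. In WaveNet the next-sample predictor is a deep neural network. Here, purely as an illustrative stand-in, the predictor is the exact two-term recurrence that continues a sine wave: sin(w·n) = 2·cos(w)·sin(w·(n−1)) − sin(w·(n−2)). The 16 kHz sample rate and 220 Hz tone are arbitrary choices for the sketch.

```python
import math
from typing import Callable, List

Predictor = Callable[[List[float]], float]


def generate(seed: List[float], predict_next: Predictor, n_samples: int) -> List[float]:
    """Autoregressive loop: every new sample is predicted from the
    samples generated so far, then appended and fed back in."""
    audio = list(seed)
    while len(audio) < n_samples:
        audio.append(predict_next(audio))
    return audio


def make_sine_predictor(freq_hz: float, sample_rate: int) -> Predictor:
    """Toy stand-in for a neural network: the linear recurrence
    x[n] = 2*cos(w)*x[n-1] - x[n-2] continues a sine of angular step w."""
    w = 2 * math.pi * freq_hz / sample_rate
    coeff = 2 * math.cos(w)
    return lambda history: coeff * history[-1] - history[-2]


SAMPLE_RATE = 16_000  # assumption: 16 kHz audio
FREQ = 220.0          # assumption: a 220 Hz tone
w = 2 * math.pi * FREQ / SAMPLE_RATE
seed = [math.sin(0.0), math.sin(w)]  # the first two samples "prime" the loop
audio = generate(seed, make_sine_predictor(FREQ, SAMPLE_RATE), n_samples=1600)
```

What matters here is the structure of the loop, not the predictor itself. A real model like WaveNet outputs a probability distribution over the next sample (the original WaveNet used 256 mu-law quantized amplitude levels) and samples from it, one step at a time.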

But just predicting the next sound isn't enough. To really speak with feeling, an AI needs to grasp the meaning of an entire sentence. That's where Transformer models changed the game.

Transformers can look at a whole sentence at once, giving them a "big picture" view. This lets them figure out which words to emphasize and where to place a natural pause. It's how an AI can tell the difference between "I didn't say HE stole the money" (someone else did) and "I didn't say he stole the MONEY" (he stole something else). The context is everything.
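The "whole sentence at once" property comes from self-attention. Below is a minimal, dependency-free sketch of textbook scaled dot-product attention, softmax(QKᵀ/√d)·V. The 2-dimensional token vectors are made-up toy values, not embeddings from any real TTS model. Production systems also add learned projections and many attention heads.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V.
    Row i of the output is a weighted average of ALL rows of v,
    so every token's representation depends on the whole sentence."""
    d = len(k[0])
    weights = [
        softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k])
        for qi in q
    ]
    outputs = [
        [sum(wt * vj[c] for wt, vj in zip(row, v)) for c in range(len(v[0]))]
        for row in weights
    ]
    return outputs, weights


# Toy self-attention: queries, keys and values are the same made-up vectors.
tokens = ["I", "didn't", "say", "he", "stole", "the", "money"]
x = [[0.1, 0.3], [0.9, 0.1], [0.2, 0.8], [0.7, 0.7], [1.0, 0.2], [0.0, 0.1], [0.6, 0.9]]
out, weights = attention(x, x, x)
for tok, row in zip(tokens, weights):
    print(f"{tok:>7}: " + " ".join(f"{wt:.2f}" for wt in row))
```

Each printed row shows how much one token draws on every other token in the sentence. This is the mechanism that lets a model weigh the entire context before it decides where stress and pauses belong.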

This shift from clunky, robotic speech to nuanced, human-like narration is a direct result of these deep learning advancements. Deep learning is the engine that turned disjointed sounds into speech that's fluid, coherent, and able to connect with listeners.

The Power of Data and Prosody

Ultimately, an AI voice is only as good as the data it’s trained on. If you teach a model with a few hours of flat, boring audio, you’re going to get a flat, boring voice. It's that simple.

But when you feed that same model a massive, diverse library of audio—think different speakers, accents, emotional tones, and speaking styles—it starts to develop a deep, sophisticated understanding of how we communicate. It's not just learning words; it's learning the art of prosody.

Prosody is the music of our speech. It's the rhythm, pitch, stress, and intonation that pack our words with meaning and emotion. It’s what makes a question sound like a question and sarcasm sound like sarcasm.

By getting a handle on prosody, AI can now deliver lines with the timing and emotional punch of a real person. This incredible ability is built on a few key pillars:

- High-Fidelity Audio: The training data has to be pristine. We're talking clean, high-quality audio from professional studios, so the AI doesn't learn to replicate background hum or microphone pops.

- Expressive Variety: To sound human, the AI needs to understand human emotions. The dataset must include a huge range of expressions—from excited and happy to serious and somber—giving the AI a full emotional palette.

- Linguistic Diversity: Including various accents and dialects helps the model grasp the incredible variety in human speech, making its own voice more flexible and natural.

When all these elements come together, you get a system that doesn't just copy human speech—it generates it from a place of deep, contextual understanding. The result is a voice that can do more than just read a script; it can engage, persuade, and connect with an audience on a truly human level.

Moving Past the Uncanny Valley

For years, AI voices were stuck in a weird, uncomfortable place—the "uncanny valley." You know the feeling. Something is almost human, but a few subtle flaws make it feel off, even a little creepy. Early text-to-speech was exactly like that. The words were there, but the rhythm was all wrong, the pitch was flat, and any hint of real emotion was completely missing. It was just… unnerving.

But today's neural voices have finally started to climb out of that valley. It wasn't one single "aha!" moment, but rather a ton of tiny, intricate details coming together to create something that feels genuinely alive. These are the finishing touches that take AI narration from something you can merely understand to something you can actually feel.

This leap forward helps explain why one industry analysis predicts the AI voice market will grow from USD 4.16 billion to USD 20.71 billion by 2031, a compound annual growth rate of 30.7%. Estimates vary with how each report defines the market. Just think back to the clunky, rule-based TTS of the early 2010s. Now, new models can mimic intonation, pauses, and emotional shades so well that it's often hard to tell them apart from a real person. You can dig into the numbers behind this growth in this detailed industry analysis on MarketsandMarkets.com.

The Subtle Art of Sounding Human

So, what are these game-changing details? It’s about so much more than just pronouncing words correctly. The magic is in recreating all the subconscious things we do when we speak without even thinking about it.

- Realistic Breathing: We don't talk in one long, unbroken stream. We have to breathe. Advanced neural voices now weave in those subtle inhalations and exhalations, which instantly makes the delivery feel more natural and less like a machine that never runs out of air.

- Natural Pauses: Sometimes, the silence between words says more than the words themselves. AI has learned to use pauses to build tension, signal a shift in thought, or just let an important point land. This gets rid of that relentless, machine-gun delivery that plagued older systems.

- Filler Words and Imperfections: Here's a fun paradox: perfect speech sounds fake. Real people hesitate. We say "um" or "ah." Some of the most sophisticated AI voices can now sprinkle in these little disfluencies, which, ironically, makes them sound far more human and authentic.

All these small elements work together to close the gap between artificial and authentic. It creates a listening experience that feels comfortable and engaging instead of jarring and strange.

Directing the AI with Prosody Conditioning

One of the most powerful new tools available is prosody conditioning. The best way to think about it is like being a film director giving instructions to an actor. Instead of just handing the AI a script, you can now tell it how to deliver the lines.

Prosody conditioning is all about guiding the AI’s emotional delivery. You can specify a tone—like excited, calm, or somber—and the model will adjust its pitch, speed, and intonation to match that feeling.
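Tools expose this control in different ways. One vendor-neutral way to pass similar delivery instructions to many TTS engines is SSML, the W3C Speech Synthesis Markup Language. Its `<prosody>` element accepts `rate`, `pitch`, and `volume` attributes. The sketch below maps tone names to SSML 1.1 label values. The `TONE_PRESETS` table and its values are hypothetical choices, not settings from any particular product, and engines differ in which attributes they honor.

```python
from xml.sax.saxutils import escape

# HYPOTHETICAL presets: each tone maps to SSML 1.1 <prosody> label values.
TONE_PRESETS = {
    "excited": {"rate": "fast", "pitch": "high", "volume": "loud"},
    "calm": {"rate": "slow", "pitch": "low", "volume": "soft"},
    "somber": {"rate": "x-slow", "pitch": "x-low", "volume": "soft"},
}


def to_ssml(text: str, tone: str) -> str:
    """Wrap a line of script in an SSML <prosody> element for the given tone."""
    attrs = TONE_PRESETS[tone]  # raises KeyError for unknown tones, on purpose
    attr_str = " ".join(f'{name}="{value}"' for name, value in attrs.items())
    # escape() turns &, < and > into entities so the script can't break the XML.
    return f"<speak><prosody {attr_str}>{escape(text)}</prosody></speak>"


print(to_ssml("Three, two, one... launch!", "excited"))
```

Strictly conformant SSML 1.1 also declares `version`, `xmlns`, and `xml:lang` on the `<speak>` root. Many cloud TTS services accept the bare form shown here.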

This level of control is huge for creators. You can make sure the narration for an action-packed video sounds energetic and fast-paced, while the voiceover for a guided meditation is gentle and soothing. The AI is no longer just a text-reader; it’s a versatile voice actor that can adapt to the mood of any project. This is a must-have for any AI story creator who wants to connect with their audience on an emotional level.

The results are so convincing that, in recent studies, listeners often can't reliably tell the difference between a top-tier neural voice and a recording of a human. This is the moment AI narration truly crossed the uncanny valley, evolving from a functional tool into an emotionally resonant one.

Putting AI Narration to Work in Your Videos

Knowing the theory behind neural voices is interesting, but putting that technology to work is where things get exciting. For most video creators, the biggest headaches aren’t about finding great ideas—they’re about the technical grind. Mic fright, inconsistent audio, and the endless hours spent editing out every little mistake can bring production to a screeching halt.

AI narration steps in to solve these exact problems. It gives you a consistent, high-quality audio source that’s ready to go 24/7. This takes the pressure off performing perfectly and frees you from needing expensive recording gear. It’s a huge shift that makes video production more accessible, letting creators focus on what really matters: the message.

The demand for this has exploded alongside short-form video. The voice AI infrastructure market is expected to rocket from USD 5.4 billion to an astounding USD 133.3 billion by 2034, growing at an incredible 37.8% CAGR. As neural voices become nearly indistinguishable from human ones, some projections give them a 49.6% share of the text-to-speech market. Platforms like TikTok and YouTube Shorts need fast, scalable audio, and AI is the answer.

Getting the Perfect Narration

You can't just paste a script into a text-to-speech engine and expect magic. To get truly great results, you have to approach it with a little more intention. Think of the AI as a voice actor you’re directing.

Here are a few practical tips to get you started:

- Choose a Voice That Fits Your Brand: Don't just settle for the default. Is your brand serious and educational, or is it upbeat and fun? Look for a platform like MotionLaps that offers a library of voices categorized by style, like "Documentary Narrator" or "Energetic Influencer," and pick one that matches your content’s personality.

- Write for the Ear, Not the Eye: Scripts should be written to be spoken aloud. That means using shorter sentences and simpler words. Before you generate anything, read your script out loud to yourself. You'll immediately catch any awkward phrasing or clunky sentences.

- Use Punctuation as Your Director: Punctuation is how you control the AI’s performance. Commas create small pauses, giving the listener a moment to breathe. Periods create longer, more final stops. Use them strategically to manage the rhythm and pacing for a much more natural feel.
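Some engines let you set pauses directly. Where they do, the "punctuation as director" idea can be made explicit. This sketch keeps each comma or sentence-ending mark and adds an SSML `<break>` element after it. The pause lengths (250 ms after commas, 600 ms after sentence ends) are hypothetical starting points, not values any particular engine uses internally.

```python
import re
from xml.sax.saxutils import escape

# HYPOTHETICAL pause lengths, in milliseconds.
PAUSE_MS = {",": 250, ".": 600, "!": 600, "?": 600}

# Punctuation followed by whitespace or the end of the text. Requiring that
# leaves decimals like "3.5" and the inner dots of an ellipsis alone.
PAUSE_POINT = re.compile(r"([,.!?])(?:\s+|$)")


def punctuation_to_breaks(script: str) -> str:
    """Turn a plain script into SSML with an explicit <break> after each
    pause-worthy punctuation mark."""
    escaped = escape(script.strip())
    with_breaks = PAUSE_POINT.sub(
        lambda m: f'{m.group(1)}<break time="{PAUSE_MS[m.group(1)]}ms"/> ',
        escaped,
    )
    return f"<speak>{with_breaks.strip()}</speak>"


print(punctuation_to_breaks("Wait, what happened? Nobody knows."))
```

Explicit breaks give you fine control, but they override the engine's own pacing. It's usually worth listening to the plain-punctuation version first.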

Comparing Traditional Voiceover with AI Narration

To really understand the impact of AI narration, it helps to see how the workflow stacks up against traditional methods. The table below breaks down the key differences in time, cost, and effort.

| Process Step | Traditional Voiceover (Hired or DIY) | AI Narration |
|---|---|---|
| Scripting | Script is written for a human to read. | Script is written and fine-tuned with punctuation for AI delivery. |
| Recording | Requires a quiet space, microphone, and multiple takes. | No recording needed. Script is pasted into the tool. |
| Editing | Hours spent removing mistakes, breaths, and background noise. | Minimal to no editing. Re-generate in seconds if a change is needed. |
| Revision | Requires re-recording sessions, adding time and cost. | Instant. Just edit the text and click "generate" again. |
| Cost | Can cost hundreds of dollars per minute for professional talent. | A small fraction of the cost, often part of a monthly subscription. |

The difference is clear. While traditional voiceover has its place, AI narration offers a level of speed and efficiency that's simply unmatched, especially for creators who need to produce content consistently.

Case Study: Scaling a Faceless YouTube Channel with AI Narration

Let's imagine a YouTube channel called "Quick History Facts" that specializes in 60-second educational shorts. The creator, Alex, started by recording the voiceovers himself. This process was a major bottleneck: finding a quiet time, doing multiple takes to get it right, and editing out mistakes took about two hours per video. Production was capped at two videos per week.

Frustrated with the slow pace, Alex switched to an AI narration tool integrated into his video creation platform. This eliminated the recording and editing phases entirely. He could write a script, paste it in, select a warm, authoritative narrator voice, and generate the final audio in under five minutes.

The result?

- Increased Output: Alex went from producing 2 videos per week to 10.

- Audience Growth: The consistent, high-quality audio and daily upload schedule led to a 450% increase in subscribers within three months.

- Higher Engagement: Viewer comments frequently praised the "clear and professional" narration, leading to a significant boost in average watch time and video shares.
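Some quick arithmetic on the scenario's own figures shows the time savings. Voiceover work took about two hours per video before the switch. After it, the scenario says the audio took under five minutes, so five minutes is used here as an upper bound. The same arithmetic shows what the 450% subscriber increase means as a multiple.

```python
# Figures from the hypothetical "Quick History Facts" scenario above.
MINUTES_PER_VIDEO_BEFORE = 120  # recording, retakes and editing by hand
MINUTES_PER_VIDEO_AFTER = 5     # upper bound: "under five minutes"


def weekly_voiceover_minutes(videos_per_week: int, minutes_per_video: int) -> int:
    """Total voiceover production time per week."""
    return videos_per_week * minutes_per_video


before = weekly_voiceover_minutes(2, MINUTES_PER_VIDEO_BEFORE)
after = weekly_voiceover_minutes(10, MINUTES_PER_VIDEO_AFTER)
print(f"Before: {before} min/week for 2 videos")
print(f"After:  <= {after} min/week for 10 videos")

# A 450% *increase* means ending at 100% + 450% = 5.5x the starting count.
growth_multiple = 1 + 450 / 100
print(f"Subscriber multiple: {growth_multiple}x")
```

Five times the output took well under a quarter of the weekly voiceover time: 240 minutes before versus at most 50 after.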

This hypothetical example shows how AI voice can be more than just a convenience: it can be a powerful growth lever for content creators. If you're curious about this model, our guide on how to start a faceless YouTube channel dives deeper into the strategy.

To really take advantage of modern AI narration, you need the right tools. A good guide to the best Text to Speech (TTS) reader can help you weigh your options. By pairing the right technology with smart scripting, you can create compelling video content faster than ever before.

Finding Your Voice in MotionLaps

It's one thing to understand the tech behind why neural voices sound so real, but it’s another thing entirely to actually put them to work. The right AI narration can take a video from good to unforgettable, but let's be honest—sifting through a sea of voice options is a massive time sink. For creators on a tight schedule, a streamlined workflow isn't just nice to have; it's essential.

That’s where MotionLaps comes in. We’ve built a curated library of best-in-class neural voices right into our video creation tool. By tapping into the powerhouse technology from industry leaders like OpenAI and Google, we’ve done the heavy lifting for you. You don't have to bounce between different apps or deal with clunky integrations.

This all-in-one approach means you can test-drive different voices with your script in real-time. You get to hear exactly how it will sound and feel, making sure the final cut is precisely what you envisioned, all without ever leaving the platform.

The interface is designed to be dead simple. It lets you browse and audition AI narrators without getting bogged down in technical settings, so you can focus on what really matters: the creative side of things.

Matching Voice Style to Visuals

The real secret to great narration? Making sure the voice actually fits the visuals. A mismatch between what your audience sees and what they hear is jarring and can completely pull them out of the experience. To help with this, MotionLaps organizes its voices into intuitive categories so you can quickly find the perfect match for your video's mood.

Here are a few of my go-to pairings for common video aesthetics:

- Documentary Narrator: Think deep, clear, and authoritative. This voice is a natural fit for photorealistic or educational content where you need to build trust and add a layer of seriousness.

- Energetic Influencer: This one is all about high energy—upbeat, friendly, and fast-paced. It’s fantastic for grabbing attention in comic book or animation styles, keeping the vibe fun and engaging.

- Calm Storyteller: When you want to pull viewers in, a soft, gentle, and evenly paced voice is perfect. It works beautifully with atmospheric styles like Japanese ink wash or dark fantasy, creating a truly immersive world.

This kind of thoughtful curation helps you make better decisions, faster. If you want to explore more options, have a look at our complete guide to the best AI voiceovers for any project.

The Simple Three-Step Workflow

The real magic of MotionLaps is just how simple it is. The platform boils down the entire process of creating a narrated video into three easy steps that absolutely anyone can follow, no matter their background.

The whole point is to remove creative friction. When you can bring your script, voice, and visuals together in one place, you can go from a basic idea to a professional, polished video in minutes, not hours.

Here’s the entire process:

- Type Your Script: Just write or paste your text directly into the editor.

- Select a Voice: Browse the library and pick the narrator that clicks with your brand and message.

- Generate Your Video: With one click, MotionLaps gets to work, combining your script, voice, and visual style into a video that’s ready to share.

This workflow really shows that the progress in AI narration isn't just about the technology itself—it's about making that tech accessible. It gives every creator the power to find the perfect sound for their story, without any of the old technical headaches.

The Future of Voice and Responsible Creation

The amazing progress we've seen in AI narration is really just scratching the surface. The next evolution is already taking shape, focusing on real-time generation and emotional intelligence. Pretty soon, telling the difference between a human and a machine voice is going to get even trickier.

Think about an AI that can generate speech with just the right emotional tone, instantly. This isn't a sci-fi movie plot; it's the direction we're headed. We're talking about personalized video narrations that can match a viewer's mood or video game characters whose voices shift based on how you play. This is where the rise of AI narration is taking us.

Of course, with this kind of power comes a hefty dose of responsibility. The same tech that can create a warm, helpful assistant can be twisted for harmful purposes. We absolutely have to tackle the big ethical questions now to make sure this technology grows in a healthy, trustworthy way.

Navigating the Ethical Landscape

The biggest red flag right now is voice cloning. Taking someone's voice and creating a digital copy without their direct permission isn't just a grey area—it's a serious ethical violation. The potential for trouble is huge, from faking celebrity endorsements to much more dangerous forms of impersonation.

This ties directly into the problem of deepfakes. AI-generated audio can be used to create recordings that sound completely real but are entirely fake, which is a perfect recipe for spreading misinformation or running sophisticated scams. We've already seen reports where scammers successfully cloned a CEO's voice to get an employee to make a fraudulent money transfer. The risks are very real.

For AI voice to have a positive future, creators and the platforms they use have to commit to doing things the right way.

The bedrock of it all has to be consent. Ethical voice cloning is only possible when the original speaker gives clear, informed permission, with transparent rules about how and where their voice will be used.

Building a Framework of Trust

Thankfully, responsible platforms are already putting safeguards in place to create a safer environment. These steps are crucial to ensuring that ever more human-sounding neural voices are used for good rather than harm.

Here are a few key safeguards:

- Explicit Consent Requirements: Reputable services demand verifiable proof of consent before they'll clone a voice. This is the first line of defense against someone's voice being used without their knowledge.

- Transparency and Disclosure: It's becoming a best practice to clearly label content that's been generated by AI. This kind of honesty helps your audience know what they're hearing and prevents any feelings of deception.

- Watermarking and Detection: Researchers are developing audio watermarking techniques that act like a digital fingerprint. They make it possible to trace synthetic audio back to its source and fight malicious deepfakes.
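A toy version of spread-spectrum watermarking, one classic technique from the research literature, illustrates the "digital fingerprint" idea. A secret key seeds a pseudo-random ±1 sequence, which is added to the audio at low amplitude. A detector holding the same key then checks for that sequence by correlation. The key, strength, signal length, and threshold are all hypothetical. Production watermarks are also designed to survive compression, resampling, and editing, which this sketch is not.

```python
import math
import random
from typing import List


def key_sequence(key: int, length: int) -> List[float]:
    """Pseudo-random +1/-1 'chips' derived deterministically from a secret key."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(length)]


def embed(audio: List[float], key: int, strength: float = 0.02) -> List[float]:
    """Add the keyed chip sequence to the audio at low amplitude."""
    chips = key_sequence(key, len(audio))
    return [s + strength * c for s, c in zip(audio, chips)]


def detect(audio: List[float], key: int, threshold: float = 0.01) -> bool:
    """The mean of audio * chips is about `strength` when the mark is
    present and close to zero otherwise, because ordinary audio is
    uncorrelated with the key sequence."""
    chips = key_sequence(key, len(audio))
    score = sum(s * c for s, c in zip(audio, chips)) / len(audio)
    return score > threshold


SECRET_KEY = 1234  # hypothetical key held by the voice platform
# Three seconds of a 220 Hz tone at 16 kHz stands in for synthetic speech.
clean = [0.5 * math.sin(2 * math.pi * 220 * n / 16_000) for n in range(48_000)]
marked = embed(clean, SECRET_KEY)
print(detect(marked, SECRET_KEY), detect(clean, SECRET_KEY))
```

The longer the audio, the more reliably the correlation separates marked from unmarked clips. Without the key, the added sequence looks like faint noise.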

By making these ethical guardrails a priority, we can keep pushing the creative boundaries of AI narration while protecting people and keeping public trust intact. The future of voice isn't just about sounding human—it's about acting with human values.

Got Questions About AI Narration? We've Got Answers.

As AI narration finds its way into more and more videos, it's natural to have questions. You're probably wondering what it's really like to use, what the catch is, and how to get the best results. Let's clear the air and tackle some of the most common questions creators have.

Can People Actually Tell It's an AI Voice?

Honestly, with the top-tier neural voices available today, most people can't. An audio engineer with a trained ear might pick up on minuscule imperfections on close listening, but your average viewer scrolling through TikTok or YouTube is very unlikely to notice.

Once you layer that voice over some background music and sync it with compelling visuals, it just… works. The quality has reached a point where the narration feels completely natural, letting your story or message shine without the voice itself becoming a distraction.

Is High-Quality AI Narration Going to Break the Bank?

Not at all. In fact, it's one of its biggest selling points. Think about the cost of hiring a professional voice actor—you're often looking at hundreds of dollars for just one short video. High-quality AI narration completely shatters that financial barrier.

Platforms like MotionLaps give you a whole library of premium neural voices for a simple subscription fee. This means you can pump out unlimited videos for less than what you'd pay a human actor for a single project.

This is a game-changer for solo creators and small teams, finally making it possible to produce a high volume of content that sounds consistently professional without a Hollywood budget.

How Should I Write My Script for an AI Narrator?

Writing for an AI is a little different than writing for a person, but it's easy once you get the hang of it. The main goal is to be crystal clear with your words and punctuation.

- Keep it simple and direct. Long, winding sentences with complex clauses can sometimes confuse the AI’s rhythm. Stick to clear, concise language.

- Use punctuation as your director. Punctuation tells the AI how to perform. A comma is a slight pause, a moment to breathe. A period is a full stop, the end of a thought. Use them intentionally to guide the pacing.

- Read it out loud yourself. This is the single best trick in the book. If a sentence feels awkward or clunky when you say it, the AI is going to struggle with it too. A quick read-through will catch most of these issues.

Are There Any Legal Headaches I Should Worry About?

When you're using the stock voices provided by a trusted platform, you're in the clear. These voices are built with ethically sourced data, and your subscription gives you the commercial license to use them in any of your monetized content, worry-free.

The real legal and ethical minefield is voice cloning, which involves creating a digital replica of a specific person's voice. That requires explicit, informed consent from the person being cloned. By sticking with the professionally-made voice library in a tool like MotionLaps, you neatly sidestep all of those complicated issues.

Ready to create compelling videos with lifelike AI narration in minutes? MotionLaps gives you access to a full suite of premium neural voices and a powerful video creation engine. Start producing viral-ready content today at https://motionlaps.ai.